From Prompts to Agents — a DevOps Engineer navigating the AI Landscape

- Haggai Philip Zagury (hagzag)

- Medium publications

- May 5, 2025

Table of Contents

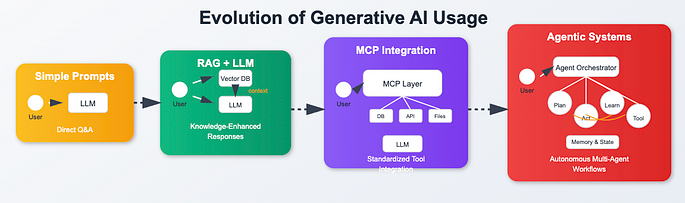

TL;DR: AI is no longer a futuristic concept; it’s fundamentally changing how we work today. For those of us specializing in DevOps, SRE, and platform engineering, this shift presents both incredible opportunities and new challenges. We’ve moved quickly from debating whether AI will impact our work to figuring out how to integrate it effectively and adapt our skill sets to stay relevant and valuable.

I’ve personally navigated this rapidly evolving landscape, starting with simple prompt-based AI tools and graduating to more sophisticated “agentic” approaches. In this post, I’d like to share my perspective as a DevOps engineer on this journey, highlighting the differences between AI assistants and agents, and exploring practical applications you can implement today.

Phase 1: The Era of AI Assistants — from Search to “Code Snippet” Helper(s)

Like many, my first foray into AI for coding involved tools focused on assisting with individual tasks. Think of these as the “search prompt” era. Tools like GitHub Copilot, or earlier iterations of Cursor and Cline, primarily function as intelligent code completers and quick knowledge sources.

These tools are fantastic for boosting productivity on isolated coding tasks — generating boilerplate, remembering API calls, or helping with repetitive code patterns. They excel at writing functions, suggesting command flags, or explaining a piece of code you’re looking at right now.

However, their effectiveness often wanes when faced with tasks that require a broader understanding of the entire project, the surrounding infrastructure, or a complex sequence of operations. They are reactive, waiting for your prompt, and typically don’t maintain a persistent context or understand high-level objectives without constant re-prompting. For complex DevOps workflows spanning multiple files, commands, and systems, this “code snippet” approach, while helpful, felt limiting.

Phase 2: Towards the Agentic Approach — Understanding Context and Taking Initiative

This is where the concept of the “AI Agent” comes into play, and I believe it represents a significant step forward, particularly for DevOps professionals.

Unlike assistants that wait for precise prompts for isolated tasks, agents are designed to:

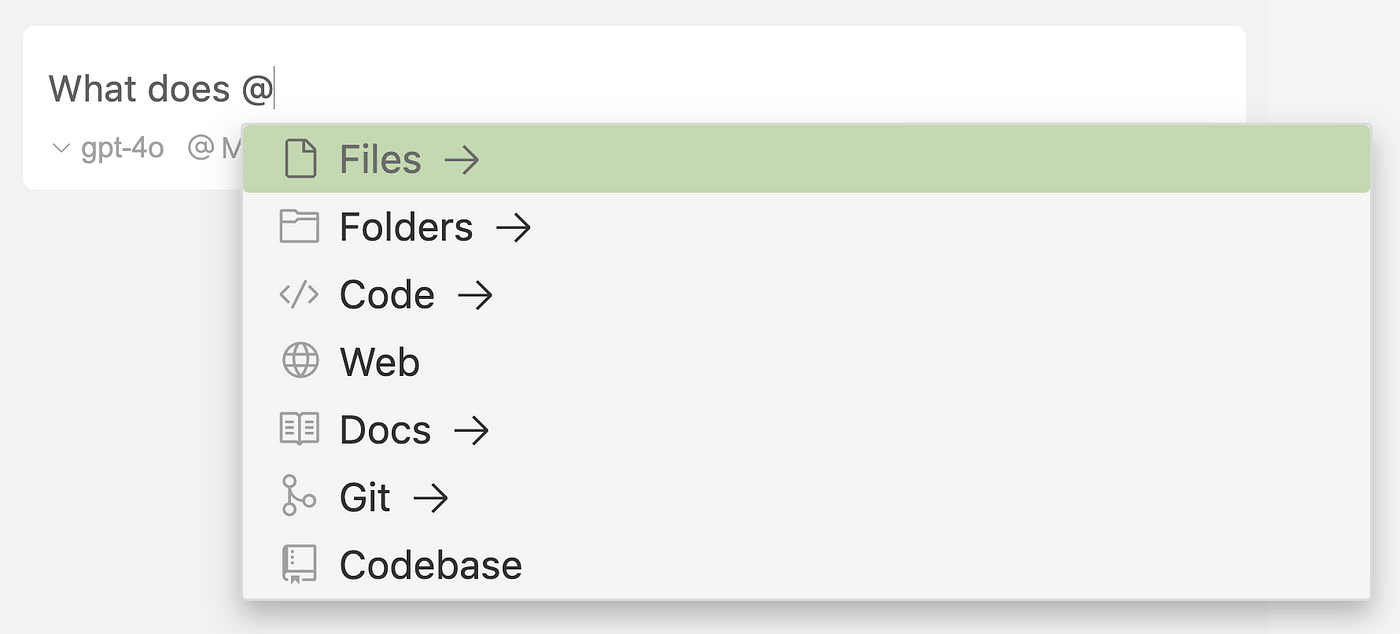

Understand Project Context

Analyze your codebase, configuration files, command history, and potentially even documentation to build a model of your project and environment.

Accept Broader Instructions

You can give them higher-level goals, such as “Set up the local development environment” or “Diagnose the failing deployment,” rather than asking only for specific code blocks or commands.

Execute Multi-Step Tasks

They can determine the necessary steps to achieve the goal, execute commands sequentially, analyze the output, and adapt their plan based on results.

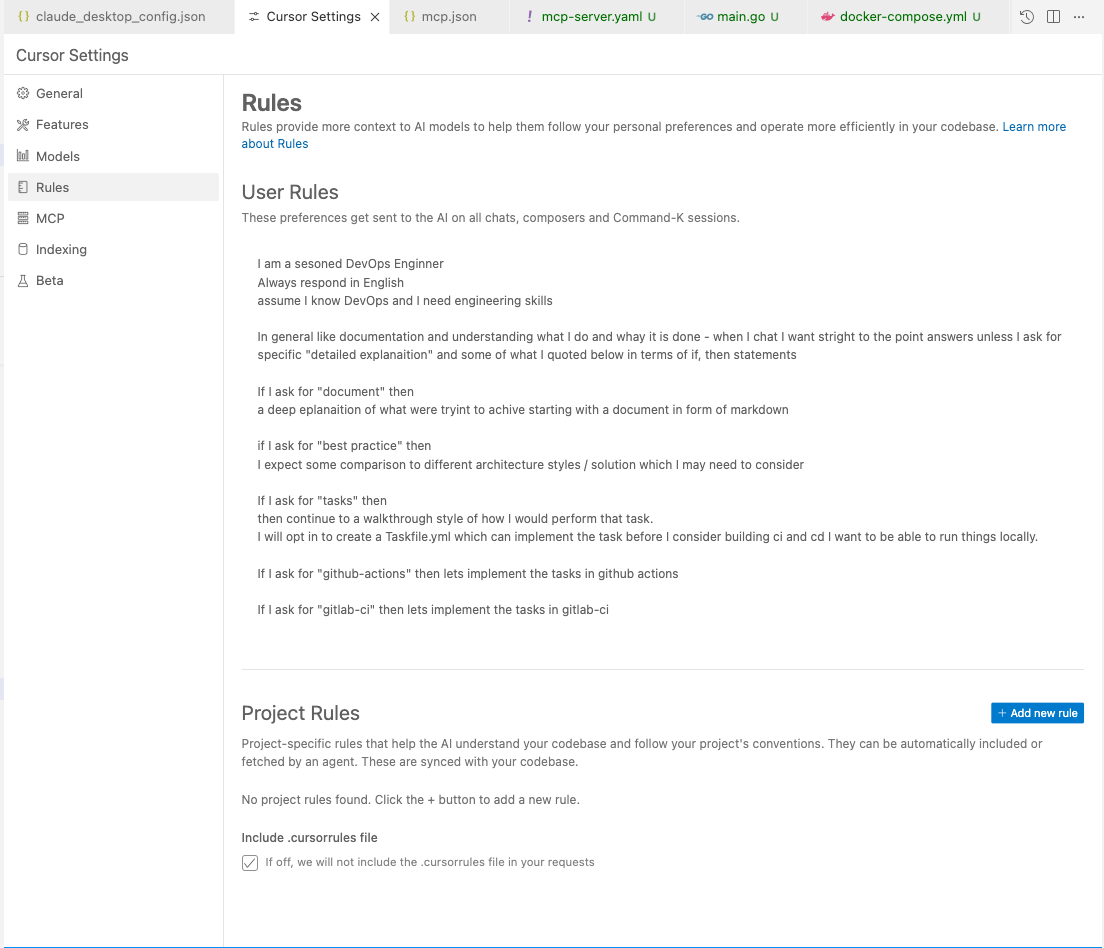

Maintain Memory/Guidelines

They can remember previous interactions, project-specific conventions, and custom instructions you provide.
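To make the "execute, analyze, adapt" idea concrete, here is a minimal sketch in Python of a loop that runs steps in order, checks each result, and tries an alternative when a step fails. The `Step` class, its `fallbacks` field, and `execute_plan` are hypothetical names chosen for illustration; this is not how any particular agent is implemented, and a real agent would ask a model to choose the next action rather than read it from a fixed list.

```python
import subprocess
import sys
from dataclasses import dataclass, field


@dataclass
class Step:
    name: str
    command: list[str]
    # Hypothetical recovery commands, tried in order if the main command fails.
    fallbacks: list[list[str]] = field(default_factory=list)


def run(command: list[str]) -> tuple[bool, str]:
    """Run one command and return (succeeded, combined output)."""
    result = subprocess.run(command, capture_output=True, text=True)
    return result.returncode == 0, (result.stdout + result.stderr).strip()


def execute_plan(steps: list[Step]) -> list[tuple[str, str]]:
    """Execute steps in order; on failure try fallbacks, and stop if none work."""
    log = []
    for step in steps:
        ok, _output = run(step.command)
        if ok:
            log.append((step.name, "ok"))
            continue
        for alternative in step.fallbacks:
            ok, _output = run(alternative)
            if ok:
                log.append((step.name, "recovered"))
                break
        else:
            # No fallback succeeded: record the failure and abandon the plan.
            log.append((step.name, "failed"))
            break
    return log


if __name__ == "__main__":
    # Harmless stand-in commands so the sketch runs anywhere Python does.
    succeed = [sys.executable, "-c", "print('done')"]
    fail = [sys.executable, "-c", "import sys; sys.exit(1)"]
    plan = [Step("install", succeed), Step("start-db", fail, fallbacks=[succeed])]
    print(execute_plan(plan))
```

The point of the sketch is the shape of the loop: each step's outcome feeds the decision about what to do next, which is the part a prompt-by-prompt assistant leaves to you.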

AI Agent in Action: From Theory to Practice

Let me illustrate this shift from reactive assistance to proactive agency with a real-world scenario that many DevOps engineers face daily.

A Common Challenge: Setting Up a Development Environment

Imagine you’re onboarding a new team member, or you need to replicate a production issue locally. The traditional approach might involve:

- Hunting through documentation for setup steps

- Running multiple commands in sequence

- Troubleshooting environment-specific issues

- Ensuring all configurations match your team’s standards

With an AI assistant, you’d ask for individual commands: “How do I start the database?” or “What’s the command to install dependencies?” Each question requires a separate prompt, and the AI has no memory of your project’s specific requirements.

Enter AI Agents: Context-Aware Automation

This is where tools like Claude Code and similar agentic platforms fundamentally change the game. Instead of asking for individual commands, I can give a high-level instruction: “Set up the local development environment for this project.”

The agent then:

- Analyzes the project structure: examining package.json, docker-compose.yml, README files, etc.

- Understands the context: recognizing this is a Node.js project with a PostgreSQL database

- Executes multiple steps: installing dependencies, starting services, running migrations

- Adapts to issues: if a port is already in use, it suggests alternatives

- Validates the setup: checking that services are running and accessible
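Two of the steps above, analyzing the project and adapting to a busy port, can be sketched with nothing but the Python standard library. The `MARKERS` mapping and the function names are assumptions made for illustration; an actual agent infers context from file contents with a model, not from a lookup table.

```python
import socket
from pathlib import Path
from typing import Optional

# Hypothetical mapping from marker files to what they suggest about a project.
MARKERS = {
    "package.json": "Node.js project",
    "docker-compose.yml": "Docker Compose services",
    "README.md": "project documentation",
}


def detect_context(project_dir: str) -> list[str]:
    """Return a label for every marker file found in the project root."""
    root = Path(project_dir)
    return [label for name, label in MARKERS.items() if (root / name).is_file()]


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Check availability by trying to bind the port on the local interface."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
        return True


def first_free_port(preferred: int, attempts: int = 20) -> Optional[int]:
    """Suggest the preferred port if free, else the next free one in range."""
    for port in range(preferred, min(preferred + attempts, 65536)):
        if port_is_free(port):
            return port
    return None


if __name__ == "__main__":
    print("Detected:", detect_context("."))
    print("Suggested port:", first_free_port(3000))
```

Checking a port by binding to it is only a point-in-time answer, so a tool built this way should still handle the server failing to start a moment later.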

In the past, we had to maintain a Makefile (or a Taskfile) that did all of the above; nowadays, an agent can work out the equivalent steps on the fly based on context!

The Terminal-First Advantage

What immediately resonated with me about Claude Code, much like AI-driven terminals such as Warp, was its terminal-first approach. While application developers might prefer IDEs, we DevOps engineers live in the terminal. Having an agent that operates natively in this environment feels natural and eliminates context switching.

When I initialize Claude Code in a project with /init, it doesn’t just start with a blank slate. It:

- Scans the project structure

- Identifies common patterns and tools

- Reads documentation and configuration files

- Creates a foundational understanding in a CLAUDE.md file

The Power of Persistent Context

Here’s where the real magic happens. The CLAUDE.md file becomes the agent’s “memory,” its working understanding of your project. More importantly, you can edit this file to encode your team’s specific practices:

# Project Guidelines for AI Agent

## Environment Setup

- Always source `.env.local` before running commands

- Use `npm run dev:secure` instead of `npm run dev` for HTTPS

- Database migrations must run before starting the server

## Safety Protocols

- Never run destructive commands without confirmation

- Always backup database before schema changes

- Use staging environment for testing deployment scripts

Now when I ask the agent to “prepare the environment for testing,” it doesn’t just start the application — it follows our specific protocols, sources the right environment file, and includes our safety checks.
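Because the guidelines file is plain Markdown, it is also easy to inspect from your own tooling, for example to check in CI that a required section has not been deleted. The sketch below assumes the heading-and-bullet layout shown above; `parse_guidelines` is a hypothetical helper, and it says nothing about how Claude Code itself consumes the file.

```python
def parse_guidelines(markdown: str) -> dict[str, list[str]]:
    """Group '- ' bullet items under the most recent '## ' heading."""
    sections: dict[str, list[str]] = {}
    current = None
    for raw in markdown.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            current = line[3:].strip()
            sections[current] = []
        elif line.startswith("- ") and current is not None:
            sections[current].append(line[2:].strip())
    return sections


if __name__ == "__main__":
    sample = """\
# Project Guidelines for AI Agent

## Environment Setup
- Always source `.env.local` before running commands

## Safety Protocols
- Never run destructive commands without confirmation
"""
    rules = parse_guidelines(sample)
    # Fail loudly if the team's safety section is missing or empty.
    assert rules.get("Safety Protocols"), "Safety Protocols section is required"
    for heading, items in rules.items():
        print(f"{heading}: {len(items)} rule(s)")
```

A check like this keeps the guidelines file honest as it evolves, since the agent can only follow rules that are still written down.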

A Practical Example

Let me show you this in action.

Traditional Approach:

# Me: "How do I start the development server?"

# AI: "Run npm start"

# Me: "It failed, the database isn't running"

# AI: "Start PostgreSQL with brew services start postgresql"

# Me: "Now I need to run migrations"

# AI: "Use npx prisma migrate dev"

# ... and so on

Agentic Approach:

# Me: "Set up the development environment"

# Agent analyzes project, checks CLAUDE.md guidelines

# - Starts PostgreSQL service

# - Sources .env.local file

# - Installs any missing dependencies

# - Runs database migrations

# - Starts the development server with HTTPS

# - Validates all services are running

# - Reports: "Development environment ready at https://localhost:3000"

The difference is profound: instead of managing individual steps, I’m delegating entire workflows while maintaining control over how they’re executed.
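"Maintaining control" can be as simple as a gate that lets routine commands through and pauses on risky ones, in the spirit of the "never run destructive commands without confirmation" guideline. The following is a rough sketch: the `DESTRUCTIVE_PREFIXES` list and the `approve` function are assumptions for illustration, the list is far from exhaustive, and it is not a description of any tool's built-in permission system.

```python
import shlex
from typing import Callable

# Hypothetical, deliberately short list of command prefixes treated as risky.
DESTRUCTIVE_PREFIXES = [
    ("rm",),
    ("dropdb",),
    ("kubectl", "delete"),
    ("terraform", "destroy"),
]


def needs_confirmation(command: str) -> bool:
    """True if the command's leading tokens match a risky prefix."""
    tokens = tuple(shlex.split(command))
    return any(tokens[: len(prefix)] == prefix for prefix in DESTRUCTIVE_PREFIXES)


def approve(command: str, confirm: Callable[[str], bool]) -> bool:
    """Safe commands pass automatically; risky ones defer to confirm()."""
    if not needs_confirmation(command):
        return True
    return confirm(command)


if __name__ == "__main__":
    def ask(cmd: str) -> bool:
        return input(f"Run '{cmd}'? [y/N] ").strip().lower() == "y"

    for cmd in ["npm run dev:secure", "kubectl delete pod web-0"]:
        print(cmd, "->", "allowed" if approve(cmd, ask) else "blocked")
```

Prefix matching is easy to bypass (for example with `sudo` or a shell pipeline), so treat a gate like this as a convenience for well-behaved workflows, not a security boundary.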

Getting Started: Your First Steps with AI Agents

Ready to make the transition? Here’s how to begin:

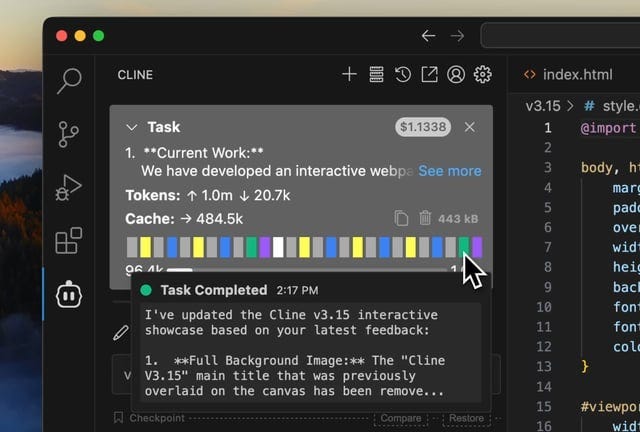

- Choose Your Tool: Start with Claude Code, Cursor, Roo Code, or Cline to experiment with agentic approaches. They all come with built-in tools for accessing the local file system, reading and writing files, executing commands, and so on.

- Initialize Your Project: With tools like Claude Code, use the /init command to let the agent learn your project structure. Alternatively, create a README.md and instruct the tool to read it to learn what your project is all about.

- Define Your Guidelines: Create or edit the CLAUDE.md or spec.md file with your team’s specific practices

- Start Small: Begin with simple tasks like “set up development environment” or “run tests”

- Iterate and Refine: Adjust your guidelines based on what works and what doesn’t

What’s Next?

This shift from reactive assistance to proactive agency becomes even more powerful when we integrate it with your broader development ecosystem. In my next article, I’ll explore how Model Context Protocol (MCP) servers give agents access to your real development environment (your Git repositories, Kubernetes clusters, and configuration files), transforming them from helpful assistants into genuine operational partners.

The transformation is already underway. The question isn’t whether this will change how we work — it’s whether we’ll lead this evolution or be led by it.

If you found this post informative, I’m curious to learn: what’s your experience with AI agents in your DevOps practice? Have you started experimenting with agentic approaches? Share your thoughts in the comments below.

Next up: Building Production-Ready AI Agent Workflows: MCP Integration and Operational Excellence