Building Production-Ready AI Agent Workflows: MCP Integration and Operational Excellence

- Haggai Philip Zagury (hagzag)

- Medium publications

- May 5, 2025


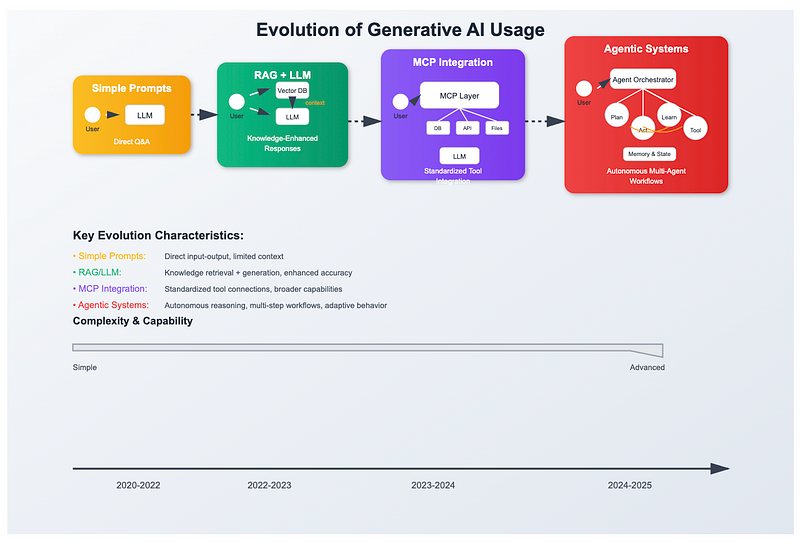

This is Part 2 of our series on AI agents in DevOps. If you haven’t read Part 1: From AI Assistants to AI Agents, I recommend starting there to understand the foundational concepts.

The Integration Challenge

In Part 1, we explored how AI agents can understand project context and execute complex workflows. But there’s a crucial limitation: agents need access to your actual development ecosystem to be truly effective.

Think about your typical DevOps workflow. You’re not just working with code in isolation — you’re:

- Checking Git repositories across multiple projects

- Monitoring Kubernetes clusters

- Reading configuration files scattered across your filesystem

- Correlating information from different tools and environments

Traditional AI assistants live in a bubble. They can suggest commands, but they can’t see your actual Git status, inspect your running containers, or analyze your real configuration files. Every interaction requires you to manually provide context: “Here’s my current Git status…”, “My pods are showing this error…”, “This is my configuration file…”

Bridging the Gap: Model Context Protocol (MCP) Servers

This is where the Model Context Protocol (MCP) becomes transformative. MCP servers (often just called “MCPs”) solve this by creating secure, standardized bridges between AI agents and your development tools.
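For a concrete sense of what “standardized” means here: MCP messages are JSON-RPC 2.0, and with the stdio transport the client launches each server as a subprocess and exchanges newline-delimited JSON over its stdin/stdout. The Python sketch below only builds the messages a client would send and does not talk to a real server. The protocol version string, the `git_status` tool name, and its `repo_path` argument are assumptions modeled on the reference Git server, so confirm them against your server’s `tools/list` response.

```python
import itertools
import json
from typing import Optional

# JSON-RPC 2.0 matches each response to its request by id.
_next_id = itertools.count(1)


def request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request as a single line (stdio framing)."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def notification(method: str) -> str:
    """Notifications carry no id, so the server sends no response."""
    return json.dumps({"jsonrpc": "2.0", "method": method})


session = [
    # Handshake: negotiate protocol version and capabilities.
    request("initialize", {
        "protocolVersion": "2024-11-05",  # assumption: use a version your server supports
        "capabilities": {},
        "clientInfo": {"name": "devops-audit-sketch", "version": "0.1.0"},
    }),
    notification("notifications/initialized"),
    # Discover which tools the server exposes.
    request("tools/list"),
    # Invoke one tool (assumed name and arguments).
    request("tools/call", {
        "name": "git_status",
        "arguments": {"repo_path": "/Users/yourname/projects/auth-service"},
    }),
]

for line in session:
    print(line)
```

The agent never sees these raw messages; its host application handles them, which is why the same agent can work with any server that speaks the protocol.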

MCPs: Giving Agents “Eyes” and “Hands”

Instead of being limited to generating suggestions, agents can now:

Inspect Your Real Environment:

- Check actual Git repository status across multiple projects

- Query live Kubernetes clusters for pod health and logs

- Read and analyze configuration files on your filesystem

- Access documentation and project metadata

Take Informed Actions:

- Make decisions based on current system state, not assumptions

- Provide context-aware suggestions that account for your specific setup

- Execute commands with full knowledge of your environment

A Practical Example: Environment Auditing

Let me show you the difference MCPs make with a real DevOps scenario. Imagine you want to understand the current state of all your projects before starting your workday.

Without MCPs (Traditional Approach):

```
# Manual context gathering required
# Me: "Can you help me check the status of my projects?"
# AI: "Sure! Please run 'git status' in each project and share the output"
# Me: [runs commands manually, copies outputs]
# AI: [provides analysis based on provided text]
```

With MCPs (Agentic Approach):

```
# Me: "Give me a summary of all my projects’ current state"
# Agent with MCP access automatically:
# 1. Scans allowed directories for Git repositories
# 2. Checks status of each repository
# 3. Identifies uncommitted changes, pending merges
# 4. Reports which projects need attention
# Agent: "Found 5 repositories. 2 have uncommitted changes,
# 1 has a pending merge conflict in the auth-service branch."
```
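The scan-and-report steps above can be approximated in plain Python, which also shows roughly what the agent gathers through its tools. This is a sketch, not the Git MCP server’s implementation: it assumes a repository is any directory containing a `.git` entry and that status comes from `git status --porcelain=v1 --branch`, whose two-letter status codes it tallies. `find_repos`, `summarize_porcelain`, and `repo_status` are hypothetical helper names.

```python
import os
import subprocess
from collections import Counter

# Porcelain v1 XY codes that mark an unmerged (conflicted) path.
CONFLICT_CODES = {"DD", "AU", "UD", "UA", "DU", "AA", "UU"}


def find_repos(root: str) -> list:
    """Return directories under root that contain a .git directory or file."""
    repos = []
    for dirpath, dirnames, filenames in os.walk(root):
        if ".git" in dirnames or ".git" in filenames:
            repos.append(dirpath)
            dirnames.clear()  # stop descending into this repository
    return sorted(repos)


def summarize_porcelain(output: str) -> dict:
    """Tally `git status --porcelain=v1 --branch` output by change type."""
    counts = Counter()
    branch = None
    for line in output.splitlines():
        if line.startswith("## "):
            branch = line[3:].split("...")[0]  # "main...origin/main [ahead 1]" -> "main"
            continue
        code = line[:2]
        if code == "??":
            counts["untracked"] += 1
        elif code in CONFLICT_CODES:
            counts["conflicted"] += 1
        elif code.strip():
            counts["changed"] += 1
    return {
        "branch": branch,
        "changed": counts["changed"],
        "untracked": counts["untracked"],
        "conflicted": counts["conflicted"],
    }


def repo_status(path: str) -> dict:
    """Run git in `path` and summarize its status (requires git on PATH)."""
    result = subprocess.run(
        ["git", "-C", path, "status", "--porcelain=v1", "--branch"],
        capture_output=True, text=True, check=True,
    )
    return summarize_porcelain(result.stdout)


if __name__ == "__main__":
    root = os.path.expanduser("~/projects")  # assumption: your allowed directory
    for repo in find_repos(root):
        status = repo_status(repo)
        if status["changed"] or status["untracked"] or status["conflicted"]:
            print(f"{repo}: needs attention {status}")
```

Keeping the parser separate from the subprocess call makes it easy to test against captured output without touching a real repository.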

Configuration: Simple Setup, Powerful Results

Setting up MCPs is straightforward. Here’s a minimal configuration that gives your agent access to three key areas:

```json
{
  "mcpServers": {
    "filesystem": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "/Users/yourname/projects"]
    },
    "git": {
      "command": "uvx",
      "args": ["mcp-server-git"]
    },
    "kubernetes": {
      "command": "npx",
      "args": ["-y", "kubernetes-mcp-server@latest"]
    }
  }
}
```

With this setup, when I ask “What needs my attention today?”, the agent can:

- Scan my filesystem to identify active projects

- Check Git status across all repositories

- Query Kubernetes for any failing pods or services

- Synthesize the information into actionable insights
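What the client does with that configuration is fairly mechanical: each `mcpServers` entry describes a subprocess to launch (`command` plus `args`, with `env` merged into the environment), and the client then speaks MCP to it over stdin/stdout. The sketch below only resolves the configuration into launch specifications and never starts a process; `LaunchSpec` and `launch_specs` are hypothetical names, and real clients do this internally. Note that the reference filesystem server receives its allowed directories as trailing command-line arguments.

```python
import json
import os
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class LaunchSpec:
    name: str
    argv: List[str]       # command followed by its arguments
    env: Dict[str, str]   # inherited environment plus per-server overrides


def launch_specs(config_text: str, base_env: Optional[Dict[str, str]] = None) -> List[LaunchSpec]:
    """Turn an `mcpServers` config into the processes a client would spawn."""
    config = json.loads(config_text)
    inherited = dict(os.environ if base_env is None else base_env)
    specs = []
    for name, server in config.get("mcpServers", {}).items():
        argv = [server["command"], *server.get("args", [])]
        env = {**inherited, **server.get("env", {})}
        specs.append(LaunchSpec(name, argv, env))
    return specs


CONFIG = """
{
  "mcpServers": {
    "filesystem": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "/Users/yourname/projects"]
    },
    "git": {"command": "uvx", "args": ["mcp-server-git"]},
    "kubernetes": {"command": "npx", "args": ["-y", "kubernetes-mcp-server@latest"]}
  }
}
"""

for spec in launch_specs(CONFIG, base_env={"PATH": "/usr/bin"}):
    print(spec.name, " ".join(spec.argv))
```

Actually starting a server would be a `subprocess.Popen(spec.argv, env=spec.env, stdin=subprocess.PIPE, stdout=subprocess.PIPE)` call, after which the JSON-RPC handshake begins.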

The Multiplication Effect

The real power emerges when MCPs work together with the persistent context we discussed in Part 1. Your claude.md file can now include environment-specific guidelines:

```markdown
# Project Guidelines:

## Daily Workflow
- Always check staging environment health before deployments
- Flag any repositories with uncommitted changes > 3 days old
- Monitor the payment-service namespace for errors

## Environment Priorities
- Production issues take precedence
- Staging deployments happen only during business hours
- Development branch merges require clean status
```

Now when the agent performs environment auditing, it’s not just gathering data — it’s applying your specific operational knowledge to prioritize what matters most.
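Applying guidelines like these to gathered data can be as simple as a filter plus a sort. In the sketch below, the three-day threshold and the production-first ordering come from the guidelines above; the `Finding` type, the helper names, and the idea of tracking each repository’s oldest uncommitted edit (for example, from file modification times) are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List

STALE_AFTER = timedelta(days=3)  # "uncommitted changes > 3 days old"
PRIORITY = {"production": 0, "staging": 1, "development": 2}  # "Production issues take precedence"


@dataclass
class Finding:
    environment: str
    message: str


def stale_repos(oldest_uncommitted: Dict[str, datetime], now: datetime) -> List[str]:
    """Repositories whose oldest uncommitted edit exceeds the threshold."""
    return sorted(repo for repo, ts in oldest_uncommitted.items() if now - ts > STALE_AFTER)


def prioritize(findings: List[Finding]) -> List[Finding]:
    """Order findings by environment; unknown environments sort last, ties keep input order."""
    return sorted(findings, key=lambda f: PRIORITY.get(f.environment, len(PRIORITY)))


now = datetime(2025, 5, 5, 9, 0, tzinfo=timezone.utc)
print(stale_repos({"auth-service": now - timedelta(days=4),
                   "frontend": now - timedelta(hours=6)}, now))
```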

Agents as Operational Guardrails: The New DevOps Feedback Loop

With access to real environments through MCPs, a fascinating possibility emerges: agents can actively help us follow best practices instead of just executing commands.

This shifts agents from being powerful executors to becoming operational partners that embody our team’s knowledge and standards.

From Reactive to Proactive Quality Enforcement

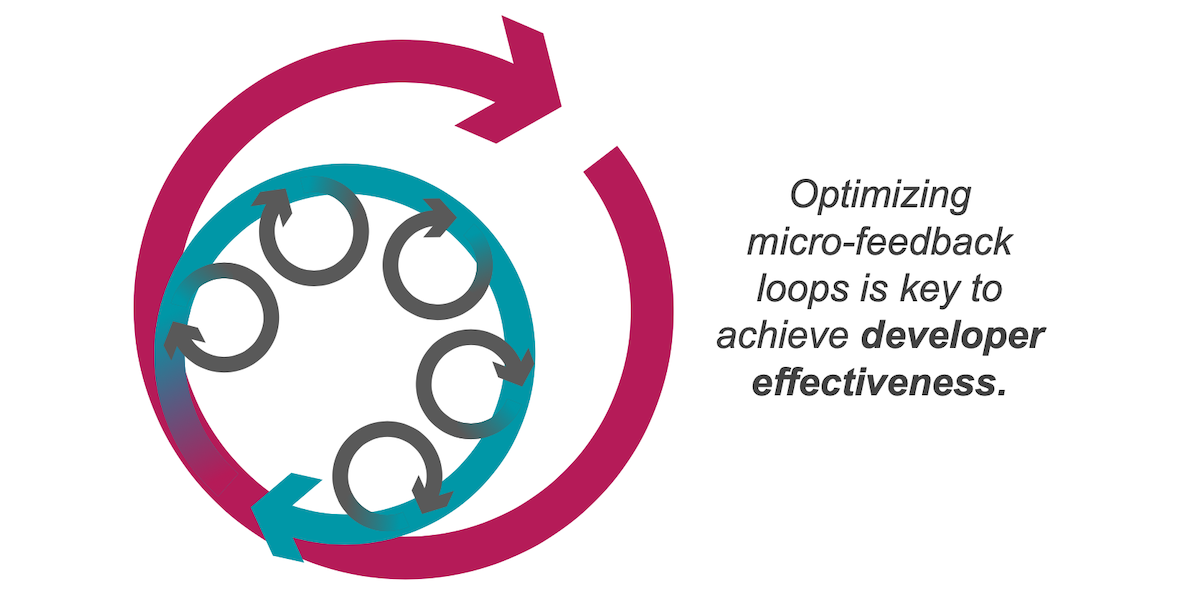

Traditional DevOps relies heavily on post-execution feedback loops. We write code, commit it, and then CI/CD pipelines tell us what went wrong. We deploy to staging, and monitoring systems alert us to problems. This reactive approach works, but it means we often discover issues late in the process.

Agents with project context can create proactive feedback loops that catch issues at the moment of execution, not hours later.

Embedding Operational Knowledge

Here’s where the claude.md file becomes transformative. Instead of just project setup instructions, we can encode our operational wisdom:

```markdown
# Operational Guidelines

## Deployment Safety Checks
- Never deploy to production on Fridays after 3 PM
- Always verify staging health before production deployment
- Database migrations require explicit approval in production

## Environment-Specific Rules
- Development: Fast iteration, minimal safety checks
- Staging: Production-like verification, automated testing
- Production: Maximum safety, human oversight for destructive operations

## Team Conventions
- Flag any Git repositories with uncommitted changes > 3 days old
- Monitor payment-service namespace for any pod restarts
- Require clean test runs before merging feature branches
```
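Rules phrased this precisely can also be expressed as code that the agent (or a CI job) runs before acting. The sketch below encodes the three production checks from the Deployment Safety Checks section; the `DeployRequest` fields and the choice to treat 15:00 onward as “after 3 PM” in the deployer’s local time are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List

FRIDAY = 4        # datetime.weekday(): Monday == 0 ... Sunday == 6
CUTOFF_HOUR = 15  # treat 15:00 onward as "after 3 PM" (assumption)


@dataclass
class DeployRequest:
    environment: str
    when: datetime           # planned deploy time, deployer's local time
    staging_healthy: bool
    has_migrations: bool
    migration_approved: bool = False


def check_deploy(req: DeployRequest) -> List[str]:
    """Return guideline violations for a deploy request (empty list means OK)."""
    if req.environment != "production":
        return []  # these three checks only apply to production
    violations = []
    if req.when.weekday() == FRIDAY and req.when.hour >= CUTOFF_HOUR:
        violations.append("No production deploys on Fridays after 3 PM")
    if not req.staging_healthy:
        violations.append("Staging must be healthy before production deployment")
    if req.has_migrations and not req.migration_approved:
        violations.append("Database migrations require explicit approval in production")
    return violations


print(check_deploy(DeployRequest("production", datetime(2025, 5, 2, 16, 0), True, False)))
```

Returning every violation, rather than stopping at the first, gives the agent enough detail to explain all the blockers in a single reply.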

Real-Time Operational Coaching

With this context, agents transform from command executors into operational coaches. Here’s how this changes typical workflows:

Traditional Approach:

```
# Me: "Deploy the payment service to production"
# Agent: [executes deployment commands]
# Later: CI/CD pipeline fails, Slack notifications fire
```

Agent-Guided Approach:

```
# Me: "Deploy the payment service to production"
# Agent: "I notice staging has 2 failing tests for payment-service.
# Our guidelines require clean staging before production.
# Should I:
# 1. Check and fix staging issues first
# 2. Deploy anyway with explicit approval
# 3. Show you the failing test details"
```
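The difference between the two transcripts is a gate that stops and offers choices instead of executing. Here is a minimal sketch of that gate, assuming the agent has already collected the list of failing staging tests; `GateResult`, `pre_deploy_gate`, and the test names are hypothetical, and the options mirror the ones in the transcript.

```python
from dataclasses import dataclass, field
from typing import List

OPTIONS = [
    "Check and fix staging issues first",
    "Deploy anyway with explicit approval",
    "Show the failing test details",
]


@dataclass
class GateResult:
    proceed: bool
    reason: str
    options: List[str] = field(default_factory=list)


def pre_deploy_gate(service: str, failing_staging_tests: List[str],
                    approved: bool = False) -> GateResult:
    """Block production deploys while staging is red, unless a human approved."""
    if not failing_staging_tests:
        return GateResult(True, f"Staging is clean for {service}")
    count = len(failing_staging_tests)
    if approved:
        return GateResult(True, f"Deploying {service} with explicit approval "
                                f"despite {count} failing staging tests")
    return GateResult(
        False,
        f"Staging has {count} failing tests for {service}; "
        "guidelines require clean staging first",
        list(OPTIONS),
    )


result = pre_deploy_gate("payment-service", ["test_refund_flow", "test_currency_rounding"])
print(result.reason)
for number, option in enumerate(result.options, start=1):
    print(f"{number}. {option}")
```

The `approved` flag is the only way past a red staging environment, so the override is always an explicit human decision rather than something the agent can infer.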

Learning from Team Patterns

The most powerful aspect is that these guidelines can evolve based on team experience. When we encounter a production issue caused by a specific oversight, we can encode that lesson into the agent’s operational knowledge:

```markdown
## Lessons Learned
- Always check Redis cache hit rates before major releases
  (Learned from: 2025-01 incident where cache was cleared)
- Verify database connection pool limits during traffic spikes
  (Learned from: 2025-03 customer spike that caused timeouts)
```

This creates a living operational knowledge base that grows with your team’s experience.
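Keeping lessons in a consistent shape also makes them machine-readable, so an agent or a release script can turn them into a pre-release checklist. The sketch below assumes the bullet-plus-“(Learned from: …)” layout used above; `Lesson` and `parse_lessons` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Matches a trailing "(Learned from: ...)" annotation.
LEARNED_FROM = re.compile(r"\(Learned from:\s*(.+?)\)\s*$")


@dataclass
class Lesson:
    check: str
    source: Optional[str] = None


def parse_lessons(markdown: str) -> List[Lesson]:
    """Collect bullets (and their provenance) from the '## Lessons Learned' section."""
    lessons: List[Lesson] = []
    in_section = False
    for raw in markdown.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            in_section = line[3:].strip().lower() == "lessons learned"
            continue
        if not in_section or not line:
            continue
        if line.startswith("- "):
            lessons.append(Lesson(check=line[2:].strip()))
            continue
        match = LEARNED_FROM.search(line)
        if match and lessons:
            lessons[-1].source = match.group(1)  # attach provenance to the preceding bullet
    return lessons


GUIDE = """\
## Lessons Learned
- Always check Redis cache hit rates before major releases
  (Learned from: 2025-01 incident where cache was cleared)
- Verify database connection pool limits during traffic spikes
  (Learned from: 2025-03 customer spike that caused timeouts)
"""

for lesson in parse_lessons(GUIDE):
    print(f"[ ] {lesson.check}  (source: {lesson.source})")
```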

Micro-Feedback Loops in Action

Rather than waiting for post-deployment monitoring to catch issues, agents can provide immediate context:

Environment Health Checks: “I see memory usage in staging has been trending up 40% over the past 2 hours. This might indicate a memory leak in the new feature branch.”

Dependency Awareness: “The auth service was restarted 10 minutes ago. The payment service deployment you’re planning depends on auth — should we wait for auth to stabilize?”

Pattern Recognition: “This is the third time this week someone has deployed the frontend without updating the corresponding API documentation. Should we add doc updates to the deployment checklist?”
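The health-check and dependency-awareness examples reduce to small computations over data the agent can already fetch through its tools: a percentage change across a metric window, and how recently a dependency restarted. In the sketch below, the 30% alert threshold and the 15-minute settle window are hypothetical defaults rather than values from any tool, and comparing only the first and last samples is a deliberately crude trend measure.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Sequence


def percent_change(samples: Sequence[float]) -> float:
    """Change from the first to the last sample in the window, in percent."""
    first, last = samples[0], samples[-1]
    if first == 0:
        raise ValueError("baseline sample is zero")
    return (last - first) * 100.0 / first


def trend_alert(metric: str, samples: Sequence[float], threshold_pct: float = 30.0) -> str:
    """Return a human-readable alert if the metric rose past the threshold, else ''."""
    change = percent_change(samples)
    return f"{metric} up {change:.0f}% over the window" if change >= threshold_pct else ""


def unstable_dependencies(deps: List[str], last_restart: Dict[str, datetime], now: datetime,
                          settle: timedelta = timedelta(minutes=15)) -> List[str]:
    """Dependencies that restarted within the settle window and may not be stable yet."""
    return [d for d in deps if d in last_restart and now - last_restart[d] < settle]


now = datetime(2025, 5, 5, 10, 0)
print(trend_alert("staging memory", [500, 560, 640, 700]))
print(unstable_dependencies(["auth", "ledger"], {"auth": now - timedelta(minutes=10)}, now))
```

A sturdier version would fit a slope over all samples or compare window averages, and would read restart times from the cluster instead of a dictionary.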

Beyond Individual Productivity

This approach scales beyond personal productivity to team-wide operational excellence:

Onboarding New Team Members: New engineers get immediate guidance that embodies months of team experience, not just documentation they might miss.

Reducing Operational Debt: Common oversights get caught automatically, reducing the accumulation of “we should have checked that” issues.

Evolving Best Practices: Teams can experiment with new operational practices and encode successful ones into agent guidance.

The Evolution of DevOps Expertise: From Executor to Orchestrator

This proactive guidance model raises important questions about the changing role of DevOps engineers. If agents can embody our operational knowledge and provide real-time coaching, what does this mean for how we define our value and expertise?

The answer lies not in replacement, but in elevation. Just as we evolved from manually deploying applications to orchestrating CI/CD pipelines, we’re now evolving from executing individual commands to orchestrating intelligent systems.

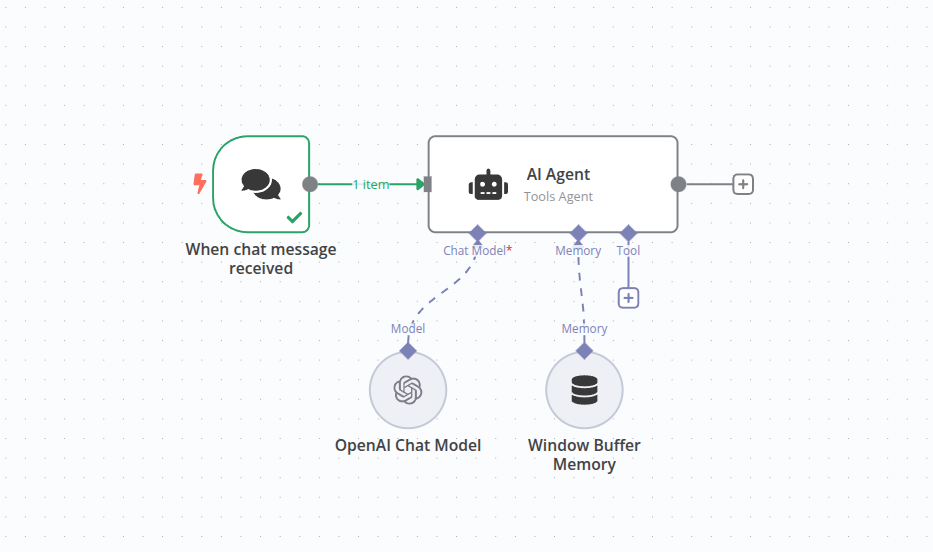

A great example of test-driving AI inside your workflow is adding an AI decision point to a business workflow. One of my colleagues shared how they use OpenAI to make decisions within an n8n pipeline…

The New DevOps Skillset

As agents handle more tactical execution, our expertise shifts toward:

Systems Thinking at Scale: Understanding how complex, distributed systems interact becomes more valuable than knowing specific command syntax. Agents can execute the commands, but they need human insight to understand the broader implications.

Operational Strategy: Defining the “what” and “why” behind operations, not just the “how.” This includes capacity planning, disaster recovery strategies, and business continuity planning, all of which require human judgment and experience.

AI Governance: An entirely new skill emerges — knowing how to effectively configure, constrain, and guide AI agents. This includes understanding their limitations, defining appropriate guardrails, and knowing when human intervention is necessary.

Cross-functional Translation: As agents become more capable, DevOps engineers increasingly serve as translators between technical capabilities and business needs, helping organizations understand what’s possible and what the trade-offs are.
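One concrete form of AI governance is a policy that sits between the agent and its tools and decides which calls run automatically and which wait for a human. The sketch below loosely mirrors the environment rules from the operational guidelines earlier (fast iteration in development, human oversight for destructive operations in production). The tool names are hypothetical placeholders for whatever your MCP servers actually expose, and unknown environments are treated as production so the policy fails closed.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    ASK_HUMAN = "ask_human"


# Hypothetical tool names; replace with the tools your MCP servers list.
READ_ONLY = {"git_status", "git_log", "read_file", "list_pods", "get_pod_logs"}
DESTRUCTIVE = {"delete_pod", "delete_namespace", "git_reset_hard"}
KNOWN_ENVIRONMENTS = {"development", "staging", "production"}


def review_tool_call(tool: str, environment: str) -> Decision:
    """Decide whether an agent's tool call runs now or waits for approval."""
    if environment not in KNOWN_ENVIRONMENTS:
        environment = "production"  # fail closed on unexpected environments
    if tool in READ_ONLY or environment == "development":
        return Decision.ALLOW  # reads are always safe; development favors fast iteration
    if tool in DESTRUCTIVE:
        return Decision.ASK_HUMAN  # destructive calls outside development need a person
    # Other state-changing calls: allowed in staging, reviewed in production.
    return Decision.ASK_HUMAN if environment == "production" else Decision.ALLOW


print(review_tool_call("delete_pod", "production"))
```

Keeping the policy as data (the two tool sets) means tightening it is a one-line change that can be reviewed like any other configuration.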

The Competitive Advantage

Organizations that master this transition early will gain significant advantages:

- Operational Velocity: Faster deployment cycles, quicker incident response, and more efficient resource management

- Knowledge Democratization: Junior engineers can operate with the guidance of senior-level operational knowledge embedded in agents

- Innovation Focus: Teams spend less time on repetitive operational tasks and more time on architectural improvements and innovation

- Risk Mitigation: Consistent application of best practices reduces human error and operational debt

Looking Forward: The Agent-Native Organization

As we look toward the future, we can envision “agent-native” organizations where:

- Infrastructure decisions are informed by AI analysis of usage patterns and performance metrics

- Deployment strategies are dynamically adjusted based on real-time risk assessment

- Incident response is initiated by agents that can correlate symptoms across multiple systems

- Capacity planning incorporates predictive modeling that accounts for business growth patterns

This isn’t science fiction — the foundational technologies exist today. The challenge is organizational: building the culture, processes, and expertise to leverage these capabilities effectively.

Ten years ago, we wrote playbooks to define processes (each playbook being a sequence of tasks), and we designed pipelines or workflows to execute those sequences (or DAGs, to give us idempotency). Today, AI should be smart enough to build those workflows on the fly.
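To make the playbook-versus-DAG comparison concrete: whether a person or an agent writes the workflow, it can be represented as tasks plus their dependencies, and a scheduler only has to run them in a valid order. The sketch below uses Python’s standard `graphlib` module (Python 3.9+) for the ordering. The task names are hypothetical, and the idempotency here comes from recording completed tasks and skipping them on a re-run, not from the graph structure itself.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# Each task maps to the set of tasks that must finish before it (hypothetical names).
DEPENDENCIES: Dict[str, Set[str]] = {
    "check_staging": set(),
    "run_migrations": {"check_staging"},
    "deploy": {"run_migrations"},
    "smoke_test": {"deploy"},
}


def run_workflow(deps: Dict[str, Set[str]],
                 tasks: Dict[str, Callable[[], None]],
                 done: Set[str]) -> List[str]:
    """Run tasks in dependency order, skipping any already recorded as done."""
    executed = []
    for name in TopologicalSorter(deps).static_order():
        if name in done:
            continue  # re-runs skip completed steps instead of repeating them
        tasks[name]()
        done.add(name)  # record completion so a retry resumes from here
        executed.append(name)
    return executed


log: List[str] = []
tasks = {name: (lambda n=name: log.append(n)) for name in DEPENDENCIES}
completed: Set[str] = set()
print(run_workflow(DEPENDENCIES, tasks, completed))  # first run executes every task
print(run_workflow(DEPENDENCIES, tasks, completed))  # second run has nothing left to do
```

An agent that generates workflows on the fly would produce the `DEPENDENCIES` mapping; the ordering and skip logic stay the same no matter who writes the graph.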

Conclusion: Embracing the Transformation

The evolution from prompts to agents represents more than a technological shift — it’s a fundamental reimagining of how we approach operations.

The DevOps engineers who thrive in this new landscape will be those who embrace their evolving role as architects, strategists, and orchestrators of intelligent systems.

Our value doesn’t diminish; it transforms. We become the designers of systems that think, the teachers of agents that learn, and the guardians of operations that scale beyond what any individual could manage alone.

The question isn’t whether this transformation will happen — it’s already underway. The question is whether we’ll lead it or be led by it.

What’s your experience with MCP integration in your DevOps practice? How are you preparing for this evolution? Share your thoughts and experiences in the comments below.